FROM OUR BLOG

The Shifting Landscape of Music Production: How AI Tools Are Changing Creative Workflows

Dec 15, 2025

1. Rethinking the Role of Technology in Modern Music Creation

1.1 A New Phase of Digital Evolution

Music production has always moved with technological progress. Tape gave way to DAWs. Hardware synths became virtual instruments. Today, a new wave is forming. Artificial intelligence is shaping how sound is imagined, crafted, and distributed. This shift is not about replacing human creativity. It is about expanding the space where ideas can happen.

1.2 Why AI Now Matters

Streaming platforms produce massive volumes of listener data. Genres rise and fall faster than traditional studios can respond. AI models can analyze these patterns and help creators react to emerging trends. This helps explain why independent artists, hobbyists, and even production studios are turning to AI tools to accelerate their pipelines.

2. Challenges Facing Contemporary Music Makers

2.1 Time Pressure and Content Volume

The demand for audio content has surged. Short-form video platforms require consistent sound cues. Podcasts need original beds. Gaming and livestreaming need loopable tracks. Yet traditional production cycles remain slow. Many creators struggle to meet the speed required.

2.2 Increasing Genre Fragmentation

Micro-genres—bedroom pop, drift-phonk, ambient trap, glitch folk—are multiplying. Audiences move quickly. Producers need reference points and rapid prototyping methods to keep up. Without structured analytics, creators rely on intuition alone. That is no longer enough in fast-moving digital ecosystems.

2.3 The Gap Between Skill Level and Creative Ambition

Many aspiring creators lack formal training. Learning sound design, arrangement, mixing, and mastering takes time. AI systems offer a supportive layer by automating tasks and offering starting points. This does not solve every problem, but it reduces friction.

3. The Rise of Intelligent Music Tools

3.1 A Brief Look at AI-Driven Functionality

The emergence of AI music generator platforms shows how machine learning can assist creators. These systems interpret prompts, genres, moods, or structural preferences, then generate music drafts that can be refined further. This allows creators to skip the blank-page phase.

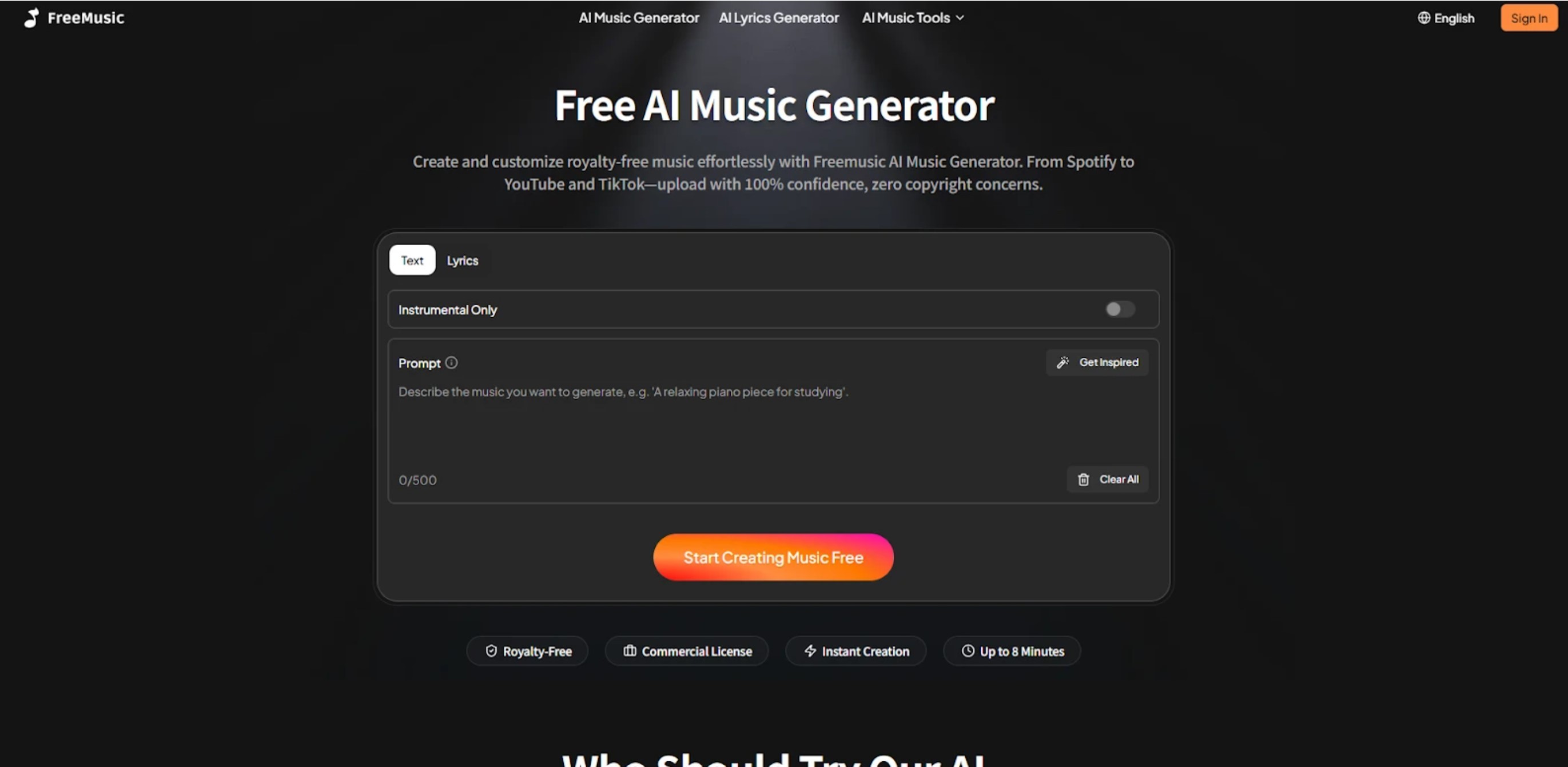

3.2 A Case in Point: How Freemusic AI Fits into the Trend

Freemusic AI is one example of a tool built around these principles. Its system analyzes genre characteristics, evolving user preferences, and structural norms found in large audio datasets. The tool provides creators with building blocks (melodic patterns, harmonic textures, rhythmic phrasing) that align with recognizable music styles. This is not a replacement for human composition. It is an assistive environment where experimentation happens faster.

4. A Closer Look at Freemusic AI’s Core Capabilities

4.1 Generation Engine Based on Style Profiling

Freemusic AI synthesizes musical elements from multiple data sources. It maps relationships between rhythm, timbre, harmony, and genre identifiers. When users request a style (lofi, EDM, cinematic, ambient), the system constructs phrases that match the structural tendencies of that category. These outputs serve as starting points for editing.

4.2 Adaptive Controls and Customization

Users can shift the intensity, mood, or pacing of the generated audio. This adaptability is important because most AI-based drafts still require human refinement. Freemusic AI lets users reshape melodies, alter instrument layers, or re-render segments. The system supports both quick idea discovery and long-form arrangement workflows.

4.3 Integrated Exporting for Digital Platforms

Creators who distribute content regularly often need compliant audio formats. Freemusic AI includes streamlined exporting options to support video editing tools, streaming platforms, and social media uploads. While simple, this feature reduces a common bottleneck for high-volume creators.

5. Putting the Tool Into Practice

5.1 A Quick Walkthrough

A creator begins by selecting a desired mood or genre. They adjust duration, energy levels, or instrument preferences. The system then outputs a draft. After listening, the user can revise, regenerate, or modify sections. The entire process can take a few minutes. This makes it useful for rapid prototyping.
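The walkthrough above can be pictured as a small settings object that gets revised between drafts. The sketch below is purely illustrative: `DraftRequest`, its field names, the 0.0 to 1.0 energy scale, and the `revise` helper are all hypothetical and are not Freemusic AI's actual interface. It only models the choose, adjust, and revise steps, and does not generate any audio.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DraftRequest:
    """Hypothetical settings a creator might choose before generating a draft."""
    genre: str
    mood: str
    duration_seconds: int = 60
    energy: float = 0.5  # assumed scale: 0.0 (calm) to 1.0 (intense)
    instruments: tuple = ()

    def __post_init__(self):
        # Reject settings that fall outside the assumed ranges.
        if not 0.0 <= self.energy <= 1.0:
            raise ValueError("energy must be between 0.0 and 1.0")
        if self.duration_seconds <= 0:
            raise ValueError("duration_seconds must be positive")


def revise(request: DraftRequest, **changes) -> DraftRequest:
    """Return a new request with some settings changed; the original is untouched."""
    return replace(request, **changes)


# First pass: pick a genre and mood, then adjust duration and energy.
first = DraftRequest(genre="lofi", mood="calm", duration_seconds=90, energy=0.3)

# After listening, raise the energy and add instruments for the next draft.
second = revise(first, energy=0.6, instruments=("piano", "vinyl crackle"))
```

Keeping each request immutable means earlier settings stay available for comparison, which fits the revise-and-regenerate loop described above.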

5.2 Real Use Scenarios

A YouTuber who produces weekly vlogs might use the platform to test multiple background tracks before committing to a final version. An indie game developer could generate ambiance loops during early design phases. A podcaster could build transitions without outsourcing. These are practical settings where time efficiency matters.

5.3 Performance Data

Reports from creators point to measurable results. A small content team reported a 40% reduction in production hours after incorporating AI-assisted composition. Another independent creator observed higher viewer retention when using more consistent thematic audio. Effects vary by workflow, but the pattern is consistent: faster iteration leaves more time for polish.

6. Strengths and Limitations of AI-Driven Production

6.1 Clear Advantages

AI tools accelerate idea generation. They help creators explore new genres without deep theoretical knowledge. They also reduce the cost barrier for independent artists. These systems make it easier to test structural ideas and find new sound directions.

6.2 Constraints to Acknowledge

AI-generated music still struggles with nuanced emotional progression. Long-form arrangement often requires manual intervention. Some prompts produce predictable structural patterns. Tools like Freemusic AI provide a foundation, but the final composition depends on the user’s taste and editing decisions.

6.3 The Balance Between Automation and Artistry

The most effective workflows mix human intuition with machine support. AI handles repetition. Humans handle expression. Recognizing this division keeps expectations realistic.

7. Who Benefits Most from These Tools?

7.1 Independent Artists and Hobbyists

These users gain affordable access to tools that were once reserved for professional studios. They can experiment without constraints. AI becomes a learning companion.

7.2 Content Creators With High Volume Needs

Creators who publish frequently—short-form video producers, game designers, streamers—benefit from AI’s speed. They need consistent and adaptable audio tracks. AI systems reduce time spent searching for suitable music.

7.3 Educators, Students, and Newcomers

AI tools help learners understand structure and arrangement. Seeing how the model constructs chord progressions or rhythmic layers can reinforce music theory concepts.

8. Why These Systems Matter for the Broader Industry

8.1 Data-Driven Creativity

The integration of analytics changes how music is planned. By analyzing listener data from global platforms, AI models can detect shifts before they become mainstream. This insight helps creators target emerging styles effectively.
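One simple way to picture "detecting shifts" is to compare how quickly play counts grow for each genre. The sketch below is an assumption-laden illustration, not a description of how any platform or Freemusic AI works: the function names, the 50% growth threshold, and the weekly play counts are all made up for the example.

```python
def growth_rates(plays_by_genre):
    """Relative change between the first and last period for each genre."""
    rates = {}
    for genre, counts in plays_by_genre.items():
        if len(counts) < 2 or counts[0] == 0:
            continue  # not enough history to compare
        rates[genre] = (counts[-1] - counts[0]) / counts[0]
    return rates


def emerging(plays_by_genre, min_growth=0.5):
    """Genres whose plays grew by at least min_growth, fastest first."""
    rates = growth_rates(plays_by_genre)
    fast = [genre for genre, rate in rates.items() if rate >= min_growth]
    return sorted(fast, key=lambda genre: rates[genre], reverse=True)


# Made-up weekly play counts, for illustration only.
sample = {
    "drift-phonk": [1000, 1400, 2100],
    "ambient trap": [800, 820, 900],
    "glitch folk": [200, 260, 340],
}

trending = emerging(sample)
```

A real system would need far more than a first-versus-last comparison (seasonality, noise, regional differences), but the ranking idea is the same: surface the styles that are growing fastest relative to where they started.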

8.2 The Acceleration of Digital Transformation

Studios and independent producers are moving toward hybrid workflows. AI tools such as Freemusic AI show how automation supports scalable production. This is part of a larger shift in the digital audio market, where adaptive creation tools are becoming standard.

8.3 Evidence From Industry Reports

Recent research from MIDiA and IFPI shows that algorithmic discovery influences more than 60% of music streamed globally. With such a strong data footprint, producers align their creation strategies with measurable listener behavior. Tools built on pattern recognition serve this trend.

9. Concluding Perspective

AI will not replace human creativity. It will reshape how creators begin, refine, and finish their work. Tools like Freemusic AI highlight the shift toward intelligent support systems that streamline production. With more personalized controls, more accurate style modeling, and faster feedback loops, creators can focus their energy on expression rather than logistics.

The future of music production lies in collaboration—people driving ideas and machines accelerating execution. This partnership marks the next chapter in digital sound creation, and it is unfolding quickly.
